Learning Infrastructure as Code with Terraform: My Experience with the Cloud Resume Challenge

In this blog post, I'll talk about the Cloud Resume Challenge and my experience with it. I have been a Systems Administrator for eight years, mostly working with on-premises infrastructure; for the past three years in my current job, I have been working in a hybrid cloud environment with some GCP and AWS exposure. I had been looking for an opportunity to expand my skill set into cloud computing and to take on new challenges. Along came the Cloud Resume Challenge. I found out about it from https://learntocloud.guide/docs/Welcome and was eager to dive right in, as it offered a chance to do a little bit of everything a typical Cloud Engineer does.

Cloud Resume Challenge basics

The Cloud Resume Challenge was created by Forrest Brazeal. Forrest hosts a Discord server where you can talk with other participants, get help and career advice, and submit your code for review. The premise of the challenge is to build a static website that hosts your resume, complete with a visitor counter, on the cloud provider of your choice. Sounds pretty simple, right? So I thought! The Cloud Resume Challenge is a choose-your-own-adventure type of challenge: there is no right or wrong way to deploy your resources. The intent is to build many of the skills and use the technologies that Cloud Engineers work with day to day. I chose AWS as the cloud provider for my static website and infrastructure. The challenge's 16 steps, listed at https://cloudresumechallenge.dev/docs/the-challenge/aws/, are broken up into four chunks so you can tackle them and learn at your own pace. Previous participants have already documented these steps thoroughly, so I won't go too in-depth along those same lines. What I want to do instead is document how I turned each step of the challenge into Infrastructure as Code using Terraform, making the back end and front end fully automated and repeatable.

Challenge Objectives:

Obtain the AWS Certified Cloud Practitioner certification.

Write your resume in HTML & CSS.

Deploy your resume as an S3 static website, served over HTTPS through Amazon CloudFront.

Point a custom DNS domain name to your CloudFront distribution.

Add a visitor counter to your website using JavaScript.

Set up a DynamoDB table to store the visitor count.

Create an API that accepts requests from your JavaScript code and communicates with DynamoDB.

Create a Lambda function using Python, triggered by your API Gateway, that reads from the DynamoDB table and updates the visitor counter. Create tests for your Python code as well.

Write it all as Infrastructure as Code.

Set up two GitHub repositories, one for the back end and one for the front end, and use GitHub Actions to automate updates and deployment.

Finally, write a blog post about your challenge.

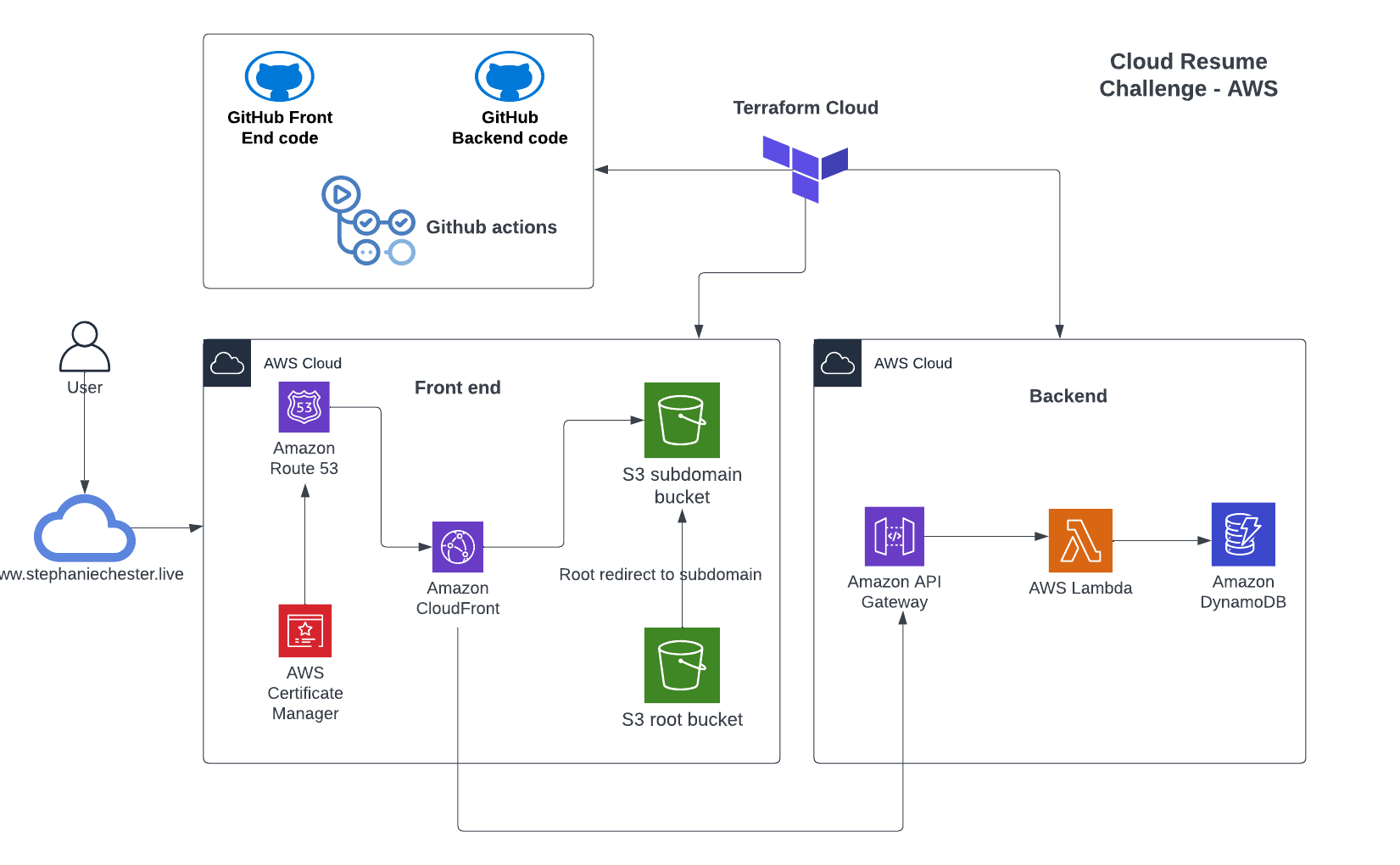

Infrastructure Diagram

My website: https://www.stephaniechester.live/

GitHub front-end repo: https://github.com/Stephanie-Chester/Cloud_Resume_FrontEnd

GitHub back-end repo: https://github.com/Stephanie-Chester/Cloud_Resume_Backend

Let's Get Started

As I mentioned, the challenge is broken up into four chunks. When I started Chunk 1, I was already studying for the AWS Certified Cloud Practitioner exam, which happens to be a recommended prerequisite for the challenge. I sat for the exam in February 2023 and passed! Great start so far.

To start, as Forrest recommends, I set up AWS Organizations and created a new account in each OU: one for a development environment and one for production. Then I started on my resume site. I already have some web design experience, but I preferred not to focus too much on this part, so I found a nice Bootstrap template I liked and edited it where I saw fit. I have to admit I got bogged down for a few weeks polishing my resume and listing all of my current and past work duties, to make sure I wasn't discounting everything I have done in my current and previous jobs.

With my resume done, I logged into the AWS console and uploaded my website files to an S3 bucket I created for this challenge. I bought my domain name and created a second bucket to redirect the root domain to the www subdomain. I made sure the S3 bucket permissions and IAM roles were in place, requested an HTTPS certificate for my site in AWS Certificate Manager, set up a CloudFront distribution for the website traffic, and configured DNS in Route 53.
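As an illustration of that redirect bucket, its website configuration boils down to one small document. Here is a minimal Python sketch (not how this site was built: that was the console, and later Terraform) that builds the WebsiteConfiguration argument accepted by boto3's put_bucket_website; the domain names are placeholders.

```python
def redirect_website_config(target_host: str) -> dict:
    """Build an S3 website configuration that redirects every request.

    The dict matches the WebsiteConfiguration parameter of boto3's
    s3.put_bucket_website(Bucket=..., WebsiteConfiguration=...).
    """
    return {
        "RedirectAllRequestsTo": {
            "HostName": target_host,  # where visitors should end up
            "Protocol": "https",      # always redirect to the HTTPS site
        }
    }


# Placeholder domains; substitute your own.
config = redirect_website_config("www.example.com")
print(config)

# With AWS credentials configured, applying it would look like:
# import boto3
# boto3.client("s3").put_bucket_website(
#     Bucket="example.com", WebsiteConfiguration=config)
```

In Terraform, the same settings map onto the redirect_all_requests_to block of the aws_s3_bucket_website_configuration resource.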

Defining the Infrastructure with Terraform

While the challenge doesn't require deploying both the front end and the back end with Infrastructure as Code, doing so is recommended as a way to further expand your learning. I have wanted to learn Terraform for some time now, so I decided to change course and build both the front end and the back end in Terraform. What better way to learn than a hands-on project, right?

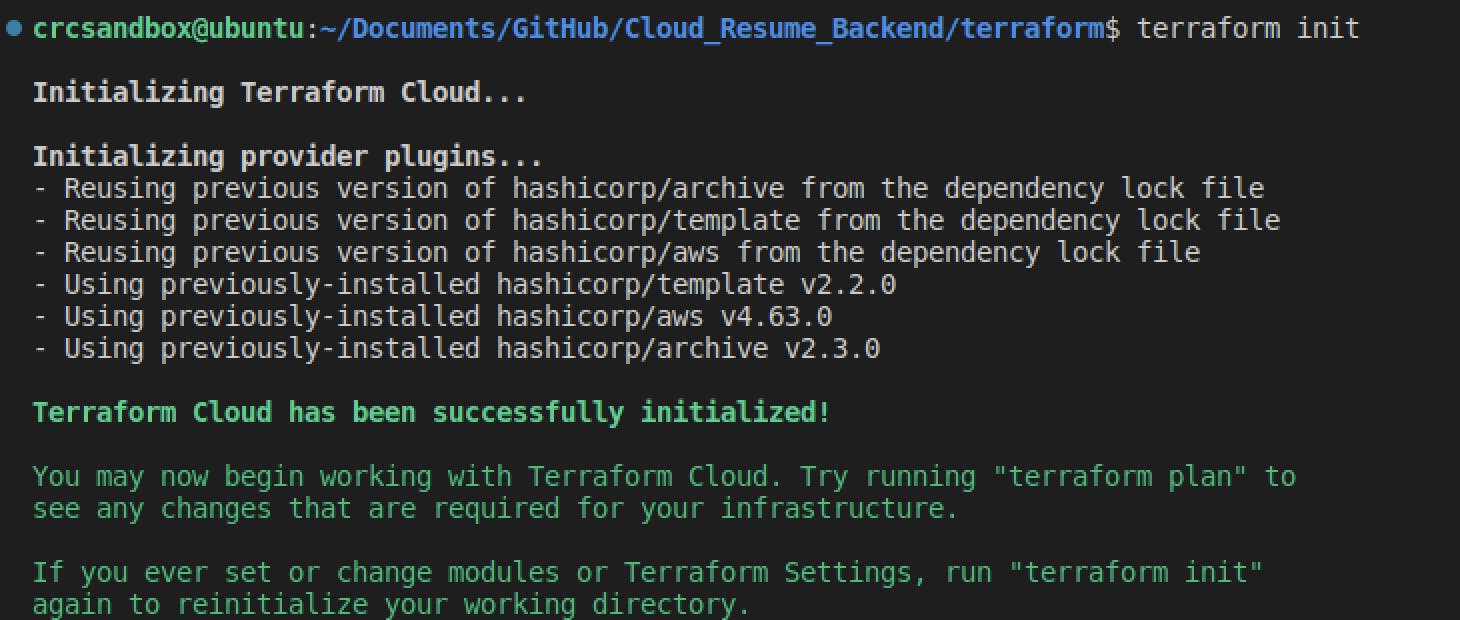

I left my initial setup where it was and used my secondary AWS account to start from a blank slate. I could have used 'terraform import' to bring my existing resources under Terraform management, but I wanted to learn from the ground up. Oh boy, was this an adventure! I chose Terraform Cloud to store my state and manage my runs. I used the official HashiCorp Terraform documentation for AWS to get started, and whenever I got stuck I referenced Terraform: Up & Running by Yevgeniy Brikman, along with plenty of Google searches, to get past the many walls I ran into along the way!

When I first set up my front-end resources with Terraform, I wrote the code for every S3, Route 53, and CloudFront resource in one go and then ran 'terraform init' to initialize the working directory. Needless to say, when I ran 'terraform apply', a whole bunch of undeclared-resource and syntax errors were staring me in the face. That was deflating to see, and so was troubleshooting the mountain of errors. So I researched best practices and found that 'terraform fmt', which rewrites files into the standard formatting, and 'terraform validate', which catches syntax errors and references to undeclared resources before anything is deployed, go a long way toward keeping the code correct. I also found that adding code one resource at a time and running 'terraform apply' after each one saves you from staring at a wall of errors. After creating everything by hand in the AWS console, deploying the same configuration from code was a pretty cool experience: I can stand up the whole front end with a simple 'terraform apply' and tear it down with 'terraform destroy'.
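That fmt-then-validate habit is easy to script. Below is a minimal Python sketch of a preflight runner, assuming the terraform CLI is on your PATH. It runs the checks in order, stops at the first failure, and deliberately stops short of 'terraform apply' so nothing is deployed until you have read the plan. The function name and check list are illustrative, not a standard tool.

```python
import shutil
import subprocess
import sys

# Preflight checks, in order. 'terraform init' comes first because
# 'terraform validate' needs an initialized working directory.
PREFLIGHT = [
    ["terraform", "init", "-input=false"],
    ["terraform", "fmt", "-check", "-recursive"],  # non-zero exit if any file is unformatted
    ["terraform", "validate"],                      # syntax and undeclared-reference errors
    ["terraform", "plan", "-input=false"],          # preview changes without deploying
]


def run_preflight(workdir: str = ".") -> int:
    """Run each check in workdir, stopping at the first failure.

    Returns 0 if every check passed, otherwise the failing exit code.
    """
    if shutil.which("terraform") is None:
        print("terraform CLI not found on PATH", file=sys.stderr)
        return 1
    for cmd in PREFLIGHT:
        print("$", " ".join(cmd))
        result = subprocess.run(cmd, cwd=workdir)
        if result.returncode != 0:
            return result.returncode
    return 0
```

Calling run_preflight(".") from the directory holding the .tf files, and running 'terraform apply' only when it returns 0, fits the one-resource-at-a-time loop: add a resource, check, apply, repeat.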

Bring on the back-end

Just as I did with the front-end resources, I also wanted to use Terraform to deploy the back end. This would prove to be a lot more challenging, but there's no stopping now, right? The most challenging parts of this section were API Gateway, the Lambda function, DynamoDB, JavaScript, and Cross-Origin Resource Sharing (CORS).

I had no previous experience with Lambda, DynamoDB, or API Gateway. Creating the DynamoDB table in Terraform was relatively painless once I learned how DynamoDB tables work, with the official Terraform documentation as a reference. Next up was creating a Lambda function that uses Boto3 to talk to the DynamoDB table and increment the visit counter. Thanks to AWS for the official Boto3 documentation that helped me write the function: https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/dynamodb.html. This part was extremely challenging because I only had basic Python experience; I spent many nights experimenting with the syntax and debugging errors. I learned a ton and am so proud of the result. After adding the IAM permissions in Terraform to allow Lambda to communicate with DynamoDB, I was able to see the visit count incrementing in the DynamoDB table! I won't share the code here so it doesn't spoil the challenge for future participants who may come across my blog.
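The Lambda code stays private, but the permissions side is generic enough to sketch without spoiling anything. A common least-privilege approach grants the function only the DynamoDB actions it calls, on only the one table. This Python sketch builds such a policy document; the region, account ID, table name, and the assumption that the function needs only GetItem and UpdateItem are all illustrative. In Terraform, an equivalent document would typically be attached to the function's role with an aws_iam_role_policy resource (for example via jsonencode), and the role usually also gets the AWS-managed AWSLambdaBasicExecutionRole policy so the function can write logs to CloudWatch.

```python
import json

# Assumed: a read-then-increment counter only needs these two actions.
COUNTER_ACTIONS = ["dynamodb:GetItem", "dynamodb:UpdateItem"]


def counter_table_policy(region: str, account_id: str, table_name: str) -> str:
    """Return a least-privilege IAM policy document (JSON) for one table."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": COUNTER_ACTIONS,
                "Resource": table_arn,  # scoped to the single counter table
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder values for illustration only.
print(counter_table_policy("us-east-1", "123456789012", "visitor-count"))
```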

Setting up API Gateway in Terraform was another hurdle. My front-end website needed some JavaScript code to communicate with the back-end API. Fortunately, with help from Stack Overflow and ChatGPT, I was able to write simple JavaScript code that sends a POST request to the API to increment the visitor counter. Cross-Origin Resource Sharing (CORS) has to be accounted for in API Gateway: because the API is served from a different origin than the website, the browser will otherwise block the visitor counter's requests. I found this handy blog post that helped me find the correct Terraform resources to deploy: https://mrponath.medium.com/terraform-and-aws-api-gateway-a137ee48a8ac. The official AWS documentation was great as well: https://docs.aws.amazon.com/apigateway/latest/developerguide/how-to-cors.html. I also needed my Lambda code to return the appropriate 'Access-Control-Allow-Origin' CORS header so the browser would let the front end read the back end's response.
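That response header can be illustrated without giving away the counter logic. With Lambda proxy integration, API Gateway maps the statusCode, headers, and body that the function returns directly onto the HTTP response, so the Access-Control-Allow-Origin header has to come from the function itself; any OPTIONS preflight is configured separately on the API Gateway side. A minimal Python sketch, where the allowed origin and the payload shape are placeholders:

```python
import json

# Placeholder: the one site origin allowed to read API responses.
ALLOWED_ORIGIN = "https://www.example.com"


def cors_response(status: int, payload: dict, origin: str = ALLOWED_ORIGIN) -> dict:
    """Build a Lambda proxy-integration response that carries CORS headers.

    API Gateway turns this dict into the HTTP response, so the browser
    sees Access-Control-Allow-Origin only if the function sets it here.
    """
    return {
        "statusCode": status,
        "headers": {
            "Access-Control-Allow-Origin": origin,
            "Content-Type": "application/json",
        },
        "body": json.dumps(payload),  # proxy integration expects a string body
    }
```

Returning the site's exact origin rather than '*' keeps other websites from reading the API's responses in a browser.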

Closing in on the finish line

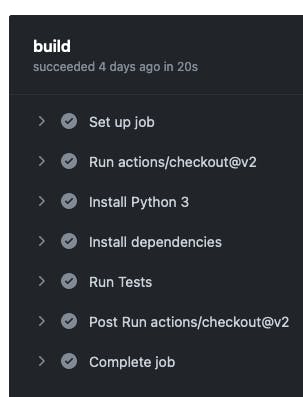

The final requirements are version control for the front-end and back-end code, and CI/CD. I had been using version control from early on in the challenge, so that box was already checked. I used GitHub Actions for CI/CD to automate updates and deployment of my front-end and back-end resources, along with unit testing of my Python code and end-to-end website testing to make sure the API works as expected. I had no prior experience with GitHub Actions before the challenge, so it took me a week or two to get familiar with each part of the requirements. I used the official Cypress GitHub Action (https://github.com/cypress-io/github-action) to create a simple workflow that tests that the API and website are accessible. The official repo has plenty of examples you can use to craft a simple end-to-end test.

Once I got that working, I spent several days wrangling Python unit tests that use Moto, and the syntax needed to get them talking to a mock DynamoDB database. The official Moto GitHub repo was very helpful in finding the code I needed: https://github.com/getmoto/moto. My test calls the Lambda function against the mock table and checks that the result is what I expect.
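Moto's job is to stand in for DynamoDB so tests never touch a real table (in recent Moto releases, by wrapping the test in the mock_aws decorator). When a test only needs to check how code uses the table, the standard library's unittest.mock can do a lighter version of the same thing with no extra packages. The sketch below takes that route, which is a swap for Moto rather than Moto itself, and tests a hypothetical read-only helper, get_visit_count; the key name 'id' and attribute name 'count' are assumptions.

```python
import unittest
from decimal import Decimal
from unittest.mock import MagicMock


def get_visit_count(table, counter_id: str = "visits") -> int:
    """Hypothetical helper: read the stored count from a boto3 Table."""
    response = table.get_item(Key={"id": counter_id})
    item = response.get("Item")  # absent when no item matches the key
    return int(item["count"]) if item else 0


class GetVisitCountTest(unittest.TestCase):
    def test_returns_stored_count(self):
        table = MagicMock()
        # The boto3 resource API returns DynamoDB numbers as Decimal.
        table.get_item.return_value = {"Item": {"id": "visits", "count": Decimal("42")}}
        self.assertEqual(get_visit_count(table), 42)
        table.get_item.assert_called_once_with(Key={"id": "visits"})

    def test_missing_item_means_zero(self):
        table = MagicMock()
        table.get_item.return_value = {}  # no "Item" key in the response
        self.assertEqual(get_visit_count(table), 0)
```

Saved as, say, test_counter.py (a hypothetical file name), it runs with 'python -m unittest test_counter'.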

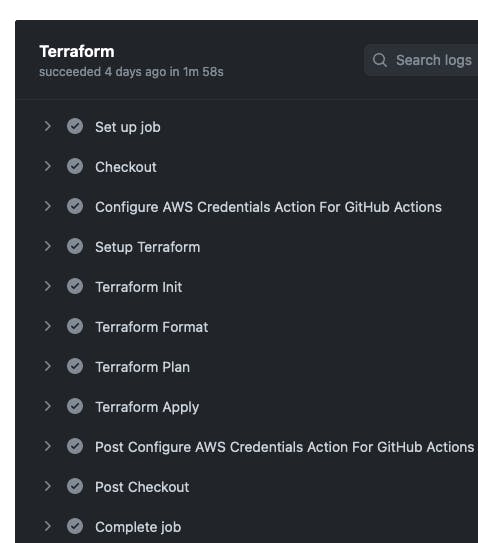

Last, but certainly not least, came CI/CD for the front end and back end. For the front end, I needed any updates to my static website to be deployed to the S3 bucket and the CloudFront cache to be invalidated so visitors get the new version. I also set up a Terraform workflow that pushes any changes to the front-end and back-end AWS resources whenever the code is modified.
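The cache-invalidation step of a front-end deploy comes down to a single CloudFront CreateInvalidation request, typically issued right after the files are uploaded (for example with 'aws s3 sync'). Here is a minimal Python sketch of the request body in the shape boto3's create_invalidation expects; the distribution ID is a placeholder, and invalidating '/*' on every deploy is one simple choice, not the only one.

```python
import time


def invalidation_batch(paths=None, caller_reference=None) -> dict:
    """Build the InvalidationBatch argument for CloudFront's CreateInvalidation.

    CallerReference should be unique per request; a nanosecond timestamp
    is used unless the caller supplies one.
    """
    paths = list(paths) if paths else ["/*"]  # "/*" invalidates every object
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        "CallerReference": caller_reference or str(time.time_ns()),
    }


# Usage in a deploy step, after syncing files to S3 (placeholder ID):
# import boto3
# boto3.client("cloudfront").create_invalidation(
#     DistributionId="E123EXAMPLE", InvalidationBatch=invalidation_batch())
```

A wildcard path like '/*' counts as a single path toward CloudFront's invalidation pricing, which keeps a whole-site invalidation per deploy cheap.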

The End, or maybe not

Overall, the Cloud Resume Challenge has been a great learning experience, and I would recommend it to anyone looking to expand their cloud computing skill set. By turning each step into Infrastructure as Code using Terraform, I was able to make the back end and front end fully automated and repeatable. I love a challenge, and this certainly was one. Huge thanks to Forrest Brazeal; one thing I love is how open-ended he made this challenge. There are so many directions you can take it and many mods you can add. I am not done with this project: I have more DevOps mods in store that I would like to do. I hope to keep learning in the cloud engineering space and to work more on Python and containerization.